Here’s how I went about running scheduled jobs using AWS Event Bridge and Fargate in ECS. Yes, there are many ways to skin this cat. This is just one.

For context, the app that needed scheduled tasks uses FastAPI. You can use a library for repeated tasks (e.g. “every 5 minutes…”), but I needed something that would run more like a cron job at a specific time (e.g. “at midnight every night…”). I also didn’t want my scheduled tasks to consume resources belonging to the ECS service that runs my user-facing API.

The next constraint (which ended up making things a little easier) is that I didn’t want to maintain separate code bases for my API and my scheduled tasks. I wanted to keep things simple and be able to reuse code between the two, such as the ORM models. I also come from the world of Rails, where this approach is common with tools like Sidekiq. In Python, the equivalent would be Django with Celery/Celery Beat. The challenge in my situation is that I wanted to keep the infrastructure as lightweight as possible, so standing up EC2 instances to support something like Celery Beat wasn’t what I was looking for.

Concept

- Continue to run my API as-is as a Fargate-based task in ECS.

- Create AWS Event Bridge rules that are cron-based and spin up short-lived Fargate tasks running the same container as my API, but with a different command sent to the container.

- Code to be run in the scheduled tasks follows a naming convention, so the container's entry point can pick the task to run from a single argument.

- Task schedule config is handled by a JSON file in the app.

Convention

The first thing I set about was creating a convention that made starting my scheduled tasks easy. The file structure I set up looks something like this:

```
/app
|__ tasks
    |__ data_ingestion_task.py
    |__ spam_task.py
```

Each one of those task Python files has a `run()` function that takes no arguments. So now if I want to run the spam_task, I can simply execute…

```bash
python -c "from app.tasks import spam_task; spam_task.run()"
```

Cool. So now I can update the bash script that I execute to start my container to look something like…

```bash
#!/usr/bin/env bash
# bin/start: container entry point. I use pipenv. You do you.

if [ -n "$1" ]; then
  # a task name was passed, e.g. ./bin/start spam_task
  # exec replaces the shell so signals from ECS (SIGTERM on stop) reach the process
  exec pipenv run python -c "from app.tasks import $1; $1.run()"
else
  # no argument: start the API server
  exec pipenv run uvicorn app.main:app --host 0.0.0.0
fi
```

This lets me specify a different command in my ECS task definition when I create it. For my API, it’s simply ["./bin/start"], and for, say, the spam_task it’s ["./bin/start","spam_task"]. The start script looks for an argument. If one is there, it assumes you’re trying to run a “task”. If not, it assumes you’re trying to start the web server.
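The `python -c` one-liner interpolates `$1` straight into Python source. A typo or unexpected argument therefore surfaces as a confusing syntax or import error. Below is a minimal sketch of an alternative. It assumes a hypothetical module `app/run_task.py`, which is not part of the original setup. The module resolves the task with `importlib` and validates the name before importing anything.

```python
import importlib
import re
import sys

# Hypothetical module: app/run_task.py. The package path follows the /app/tasks layout above.
TASK_PACKAGE = "app.tasks"

# Task names must be plain Python module names, e.g. "spam_task".
_VALID_NAME = re.compile(r"^[a-z_][a-z0-9_]*$")


def run_task(task_name, package=TASK_PACKAGE):
    """Import <package>.<task_name> and call its zero-argument run() function."""
    if not _VALID_NAME.match(task_name):
        raise ValueError(f"invalid task name: {task_name!r}")
    module = importlib.import_module(f"{package}.{task_name}")
    run = getattr(module, "run", None)
    if not callable(run):
        raise AttributeError(f"{module.__name__} has no run() function")
    return run()


def main(argv):
    """Entry point for `python -m app.run_task <task_name>`; returns an exit code."""
    if len(argv) != 1:
        print("usage: python -m app.run_task <task_name>", file=sys.stderr)
        return 2
    run_task(argv[0])
    return 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Dispatch only when a task name is passed on the command line.
    sys.exit(main(sys.argv[1:]))
```

With this in place, the task branch of the start script could call `pipenv run python -m app.run_task "$1"` instead of building Python source from the argument.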

Configuration

Now that I have a way to run scheduled tasks (locally, at least), I need a way to specify what’s needed to create the “rule” in AWS Event Bridge. I did that with a simple JSON file, /app/scheduled_tasks.json. It looks like this:

```json
{
  "tasks": [
    {
      "name": "IngestData",
      "task": "data_ingestion_task",
      "cron": "0 6 * * ? *"
    }
  ]
}
```

This gives us a simple way to specify scheduled tasks and all the data we need to automate the creation of the AWS Event Bridge “rules” and their “targets”. “Rules” tell Event Bridge when things should happen. “Targets” tell it what to do. In our case, the “target” is always a revision of a Fargate task definition with the command we need to run our task. For the sake of keeping sprawl to a minimum, I have a single ECS task definition that gets used for all Event Bridge targets as well as my API. Each one of those targets/services gets a specific revision with the necessary command for starting the container.

Note that Event Bridge cron expressions have six fields (minutes, hours, day-of-month, month, day-of-week, year) and are evaluated in UTC. The example above fires every day at 06:00 UTC.
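A bad cron string only fails at deploy time, so it can help to validate `scheduled_tasks.json` earlier, for example in a unit test that CI runs. Below is a minimal sketch that assumes the file layout shown above; `load_scheduled_tasks` and `ScheduledTask` are hypothetical names. It checks two of the Event Bridge cron rules that are easiest to trip over. An expression must have six fields, and exactly one of day-of-month or day-of-week must be `?`.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ScheduledTask:
    name: str  # used to build the Event Bridge rule name
    task: str  # module name under app/tasks
    cron: str  # Event Bridge cron expression (six fields, UTC)


def load_scheduled_tasks(text):
    """Parse scheduled_tasks.json content and sanity-check each cron expression."""
    tasks = []
    for entry in json.loads(text)["tasks"]:
        fields = entry["cron"].split()
        if len(fields) != 6:
            raise ValueError(f"{entry['name']}: expected 6 cron fields, got {len(fields)}")
        day_of_month, day_of_week = fields[2], fields[4]
        # Event Bridge requires '?' in exactly one of the two day fields.
        if (day_of_month == "?") == (day_of_week == "?"):
            raise ValueError(f"{entry['name']}: use '?' in exactly one of day-of-month/day-of-week")
        tasks.append(ScheduledTask(entry["name"], entry["task"], entry["cron"]))
    return tasks
```

A standard five-field crontab line such as `0 6 * * *` is rejected here, which catches the most common copy-paste mistake.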

And that brings us to deployment.

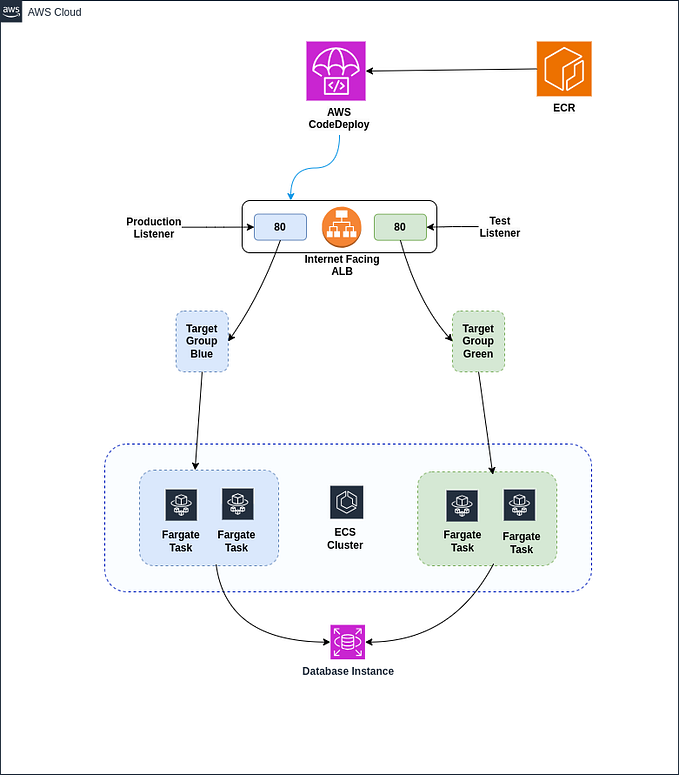

Deployment

Now it’s time to bring this all together. This is where it gets a little involved. I’ll show everything using the AWS CLI, but you could easily do this with whatever abstraction you want to apply. The basic flow goes like this:

- Merge new code in GitHub.

- Have the build server run tests, build the new version of the container, push the container to AWS ECR, and then deploy with the following steps…

  - Delete all the current scheduled tasks.

  - Loop over the tasks in scheduled_tasks.json and create new Event Bridge rules and targets that point to new revisions of our ECS task definition using the latest container.

  - Create a final new revision of our ECS task definition to run our API and update the ECS service that runs it to point to that revision.

Below are the details of that deployment script. First, get some basics out of the way…

```bash
#!/usr/bin/env bash
# fail fast, including when an aws call inside a pipe fails
set -eo pipefail

# strip the surrounding double quotes jq leaves on string values
function dequote() {
  sed -e 's/^"//' -e 's/"$//' <<< "$1"
}

# define some variables
ECS_CLUSTER=your-cluster
SERVICE_NAME=your-service
HASH="$(git rev-parse HEAD)" # we use this for versioning
TASK_FAMILY=your-task
ECR_IMAGE="123456.dkr.ecr.us-east-1.amazonaws.com/foobar:$HASH"

# grab the JSON task definition of what's currently running
TASK_DEFINITION=$(aws ecs describe-task-definition --task-definition "$TASK_FAMILY" --region us-east-1)
```
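`describe-task-definition` wraps the definition in a `taskDefinition` key and adds read-only fields. `register-task-definition` rejects those fields as unknown parameters. Below is a minimal Python sketch of the transformation that the jq filters later in this script perform: unwrap the definition, swap in the new image and command, and drop the read-only fields. `to_registration_input` is a hypothetical name. Like the jq filters, it assumes the app is the first container.

```python
import copy

# Fields returned by describe-task-definition that register-task-definition does not accept
# (the same list the jq filters in this script delete).
READ_ONLY_FIELDS = (
    "taskDefinitionArn", "revision", "status", "requiresAttributes",
    "compatibilities", "registeredAt", "registeredBy",
)


def to_registration_input(described, image, command):
    """Build register-task-definition input from describe-task-definition output.

    Assumes the app container is containerDefinitions[0], as the jq filters do.
    The input dict is not modified.
    """
    task_def = copy.deepcopy(described["taskDefinition"])
    for field in READ_ONLY_FIELDS:
        task_def.pop(field, None)
    container = task_def["containerDefinitions"][0]
    container["image"] = image
    container["command"] = list(command)
    return task_def
```

The result can be serialized with `json.dumps` for `--cli-input-json`, or passed as keyword arguments to boto3's `register_task_definition`.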

Now let’s get rid of current Event Bridge rules and targets…

```bash
# list all current rules created by this script
RULES=$(aws events list-rules --name-prefix "your__prefix" | jq '.Rules[].Name')

# delete all scheduled tasks
IFS=$'\n'
SPLIT_RULES=($RULES)

for rule in "${SPLIT_RULES[@]}"; do
  rule=$(dequote "$rule")

  # get the targets
  targets=$(aws events list-targets-by-rule --rule "$rule" | jq '.Targets[].Id')
  split_targets=($targets)

  # remove every target; a rule can't be deleted while it still has targets
  for target in "${split_targets[@]}"; do
    target=$(dequote "$target")
    echo "Removing target $target for rule $rule..."
    aws events remove-targets --rule "$rule" --ids "$target"
  done

  echo "Deleting rule $rule..."
  aws events delete-rule --name "$rule"
done
```
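If you'd rather drive this step from Python, the same cleanup maps onto the boto3 Event Bridge client methods `list_rules`, `list_targets_by_rule`, `remove_targets` and `delete_rule`. Below is a minimal sketch that has not been tested against a live account. It accepts any object with those methods, so it can be exercised with a fake. It collects rule names before deleting anything, and it removes a rule's targets in one call. It assumes each rule has few enough targets to fit in one `list_targets_by_rule` page.

```python
def delete_scheduled_rules(events, prefix):
    """Delete every rule whose name starts with prefix, removing its targets first.

    `events` is expected to behave like boto3.client("events").
    Returns the names of the deleted rules.
    """
    # Collect all matching rule names first so deletions don't disturb pagination.
    names, kwargs = [], {"NamePrefix": prefix}
    while True:
        page = events.list_rules(**kwargs)
        names.extend(rule["Name"] for rule in page.get("Rules", []))
        token = page.get("NextToken")
        if not token:
            break
        kwargs["NextToken"] = token

    for name in names:
        targets = events.list_targets_by_rule(Rule=name).get("Targets", [])
        target_ids = [target["Id"] for target in targets]
        if target_ids:
            # A rule cannot be deleted while it still has targets.
            events.remove_targets(Rule=name, Ids=target_ids)
        events.delete_rule(Name=name)
    return names
```

Against AWS this would be called as `delete_scheduled_rules(boto3.client("events"), "your__prefix")`.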

Excellent. Now let’s take a look at our config for scheduled tasks and create new rules and targets…

```bash
# look up the cluster ARN once; every target needs it
cluster_arn=$(aws ecs describe-clusters --clusters "$ECS_CLUSTER" | jq '.clusters[0].clusterArn')
cluster_arn=$(dequote "$cluster_arn")

i=0
task=$(jq --argjson I $i '.tasks[$I]' ./app/scheduled_tasks.json)

# for each task in config:
while [ "$task" != null ]; do
  # extract config
  name=$(jq --argjson I $i '.tasks[$I].name' ./app/scheduled_tasks.json)
  name=$(dequote "$name")
  file=$(jq --argjson I $i '.tasks[$I].task' ./app/scheduled_tasks.json)
  file=$(dequote "$file")
  cron=$(jq --argjson I $i '.tasks[$I].cron' ./app/scheduled_tasks.json)
  cron=$(dequote "$cron")

  # create task revision with appropriate command
  NEW_TASK_DEFINITION=$(echo "$TASK_DEFINITION" | jq --arg IMAGE "$ECR_IMAGE" --arg CMD "$file" '.taskDefinition | .containerDefinitions[0].image = $IMAGE | .containerDefinitions[0].command = ["./bin/start", $CMD] | del(.taskDefinitionArn) | del(.revision) | del(.status) | del(.requiresAttributes) | del(.compatibilities) | del(.registeredAt) | del(.registeredBy)')
  NEW_TASK_INFO=$(aws ecs register-task-definition --region us-east-1 --cli-input-json "$NEW_TASK_DEFINITION")

  # create the Event Bridge rule (the schedule)
  aws events put-rule --schedule-expression "cron($cron)" --name "your__prefix__$name" > /dev/null

  # create the JSON describing the scheduled event's target
  # (this JSON template file is below)
  target_template=$(jq '.' ./bin/scheduled_task_definition.json)
  task_arn=$(echo "$NEW_TASK_INFO" | jq '.taskDefinition.taskDefinitionArn')
  task_arn=$(dequote "$task_arn")
  target=$(echo "$target_template" | jq --arg name "your__prefix__rule_target__$name" --arg CLUSTER_ARN "$cluster_arn" --arg TASK_ARN "$task_arn" '.[0].Id = $name | .[0].Arn = $CLUSTER_ARN | .[0].EcsParameters.TaskDefinitionArn = $TASK_ARN')

  # attach the target to the rule created above (same name)
  aws events put-targets --rule "your__prefix__$name" --targets "$target" > /dev/null
  echo "Created rule and target for your__prefix__$name."

  # iterate
  i=$((i+1))
  task=$(jq --argjson I $i '.tasks[$I]' ./app/scheduled_tasks.json)
done
```
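Each pass through that loop boils down to four values per task: the rule name, the target Id, the schedule expression and the container command. The small Python sketch below derives them from the config, which is handy for a dry run before touching AWS. `plan_rules` is a hypothetical name, and the `your__prefix__` prefix is the same placeholder the script uses. The check encodes Event Bridge's naming constraints for rule names and target Ids: 1 to 64 characters from letters, digits, `.`, `-` and `_`.

```python
import json
import re

RULE_PREFIX = "your__prefix__"  # placeholder prefix, same as the deploy script
_EVENTS_NAME = re.compile(r"^[.\-_A-Za-z0-9]{1,64}$")  # rule name / target Id constraints


def plan_rules(config_text, prefix=RULE_PREFIX):
    """Return one dict per configured task describing the rule and target to create."""
    plans = []
    for entry in json.loads(config_text)["tasks"]:
        rule_name = f"{prefix}{entry['name']}"
        target_id = f"{prefix}rule_target__{entry['name']}"
        for value in (rule_name, target_id):
            if not _EVENTS_NAME.match(value):
                raise ValueError(f"invalid Event Bridge name or Id: {value!r}")
        plans.append({
            "rule": rule_name,
            "target_id": target_id,
            "schedule": f"cron({entry['cron']})",
            "command": ["./bin/start", entry["task"]],
        })
    return plans
```

Long task names are the likely failure here. The prefixes eat into the 64-character budget, so a name that looks fine in the JSON can still produce an invalid target Id.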

The key part of that loop is the jq filter that gives each revision its own command:

```
.containerDefinitions[0].command = ["./bin/start", $CMD]
```

Also, as promised in the snippet, here’s the scheduled_task_definition.json file:

```json
[{
  "Id": "[arbitrary id]",
  "Arn": "[cluster arn]",
  "RoleArn": "arn:aws:iam::123456:role/ecsEventsRole",
  "EcsParameters": {
    "TaskDefinitionArn": "[passed back from task creation]",
    "TaskCount": 1,
    "LaunchType": "FARGATE",
    "NetworkConfiguration": {
      "awsvpcConfiguration": {
        "Subnets": [
          "subnet-123",
          "subnet-4556"
        ],
        "SecurityGroups": ["sg-987"],
        "AssignPublicIp": "ENABLED"
      }
    },
    "PlatformVersion": "LATEST"
  }
}]
```

The RoleArn above is worth noting. It’s important that the role you provide here has the appropriate permissions as outlined in this article. At a minimum, Event Bridge must be allowed to call ecs:RunTask on the task definition and iam:PassRole for the roles the task uses. It took some time debugging “failed invocations” of our test rule before we figured out that it was a permissions issue.
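The jq expression in the deploy loop fills three placeholders in this template: the target Id, the cluster ARN and the task definition ARN. Below is the same step in Python as a minimal sketch. `build_target` is a hypothetical name. It copies the template so one parsed file can be reused for every task without leaking values between them.

```python
import copy


def build_target(template, target_id, cluster_arn, task_definition_arn):
    """Fill the Id, cluster Arn, and TaskDefinitionArn of the first target in the template.

    `template` is the parsed scheduled_task_definition.json (a one-element list).
    Returns a new list; the template itself is left untouched.
    """
    targets = copy.deepcopy(template)
    targets[0]["Id"] = target_id
    targets[0]["Arn"] = cluster_arn
    targets[0]["EcsParameters"]["TaskDefinitionArn"] = task_definition_arn
    return targets
```

`json.dumps(...)` of the result gives the string for `--targets`. Alternatively, the list can be passed as `Targets=` to boto3's `put_targets`, since the keys already match that API's field names.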

OK, now that we’ve re-created all the rules that invoke scheduled tasks, we create one more revision of our task definition to run our API and update the ECS service that runs it…

```bash
echo "Deploying API..."

NEW_TASK_DEFINITION=$(echo "$TASK_DEFINITION" | jq --arg IMAGE "$ECR_IMAGE" '.taskDefinition | .containerDefinitions[0].image = $IMAGE | .containerDefinitions[0].portMappings[0].containerPort = 8000 | .containerDefinitions[0].portMappings[0].hostPort = 8000 | .containerDefinitions[0].portMappings[0].protocol = "tcp" | .containerDefinitions[0].command = ["./bin/start"] | del(.taskDefinitionArn) | del(.revision) | del(.status) | del(.requiresAttributes) | del(.compatibilities) | del(.registeredAt) | del(.registeredBy)')

NEW_TASK_INFO=$(aws ecs register-task-definition --region us-east-1 --cli-input-json "$NEW_TASK_DEFINITION")

NEW_REVISION=$(echo "$NEW_TASK_INFO" | jq '.taskDefinition.revision')

aws ecs update-service --cluster "${ECS_CLUSTER}" --service "${SERVICE_NAME}" --task-definition "${TASK_FAMILY}:${NEW_REVISION}" --region us-east-1
```
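For completeness, the API step could also be written against the boto3 ECS client methods `register_task_definition` and `update_service`, as in the sketch below. It is untested against a live cluster, and `deploy_api` is a hypothetical name. It takes any client with those two methods. It assumes `task_def` is already cleaned of read-only fields and carries the API image, the port mapping and the `./bin/start` command.

```python
def deploy_api(ecs, task_def, cluster, service):
    """Register a new API revision and point the ECS service at it.

    `ecs` is expected to behave like boto3.client("ecs"); `task_def` must already be
    valid register_task_definition input (read-only fields removed).
    Returns the "family:revision" string the service was updated to.
    """
    registered = ecs.register_task_definition(**task_def)["taskDefinition"]
    family_revision = f"{registered['family']}:{registered['revision']}"
    ecs.update_service(cluster=cluster, service=service, taskDefinition=family_revision)
    return family_revision
```

If the build should block until the rollout finishes, boto3's `services_stable` waiter can follow the `update_service` call.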

Conclusion

This is one way you could accomplish scheduling tasks. I like this approach because it uses very few resources: each task takes what it needs for just as long as it needs it and then goes away until next time. In case there was any doubt before, I’m a total nerd. I loved the process of creating a framework that is equal parts code and infrastructure. Hopefully this will be useful to somebody out there.
